Full applications w/Bayes

Plus some ways of handling missing data

Daniel Anderson

Week 8

Data

```r
nurses
## # A tibble: 1,000 x 11
##    hospital  ward wardid nurse   age gender experien stress wardtype     hospsize expcon    
##       <dbl> <dbl>  <dbl> <dbl> <dbl> <chr>     <dbl>  <dbl> <chr>        <chr>    <chr>     
##  1        1     1     11     1    36 Male         11      7 general care large    experiment
##  2        1     1     11     2    45 Male         20      7 general care large    experiment
##  3        1     1     11     3    32 Male          7      7 general care large    experiment
##  4        1     1     11     4    57 Female       25      6 general care large    experiment
##  5        1     1     11     5    46 Female       22      6 general care large    experiment
##  6        1     1     11     6    60 Female       22      6 general care large    experiment
##  7        1     1     11     7    23 Female       13      6 general care large    experiment
##  8        1     1     11     8    32 Female       13      7 general care large    experiment
##  9        1     1     11     9    60 Male         17      7 general care large    experiment
## 10        1     2     12    10    45 Male         21      6 special care large    experiment
## # … with 990 more rows
```

Model 1

Fit the following model

$$ \small \begin{aligned} \operatorname{stress}_{i} &\sim N \left(\alpha_{j[i]} + \beta_{1j[i]}(\operatorname{experien}), \sigma^2 \right) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{wardtype}_{\operatorname{special\ care}}) \\ &\gamma^{\beta_{1}}_{0} + \gamma^{\beta_{1}}_{1}(\operatorname{wardtype}_{\operatorname{special\ care}}) \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{1j}} \\ \rho_{\beta_{1j}\alpha_{j}} & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \end{aligned} $$

02:00
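In R model formulas, `a * b` is shorthand for `a + b + a:b`. For the model above, the `wardtype` main effect plays the role of \(\gamma_1^{\alpha}\), and the cross-level `experien:wardtype` interaction plays the role of \(\gamma_1^{\beta_1}\). A base R check of the expansion using `terms()` (no model is fit, so the `nurses` data are not needed):

```r
# Expand formulas into their fixed-effect term labels without fitting anything.
# The random-effects part, e.g. (experien | wardid), is handled by lme4, not terms().
f_star <- stress ~ experien * wardtype
f_long <- stress ~ experien + wardtype + experien:wardtype

labels_star <- attr(terms(f_star), "term.labels")
labels_long <- attr(terms(f_long), "term.labels")

labels_star                         # "experien", "wardtype", "experien:wardtype"
identical(labels_star, labels_long) # TRUE: the two spellings are the same model
```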


```r
library(lme4)

lmer(stress ~ experien * wardtype + (experien|wardid), data = nurses)

# or, equivalently
lmer(stress ~ experien + wardtype + experien:wardtype + (experien|wardid),
     data = nurses)
```

Model 2

Fit the following model

$$ \scriptsize \begin{aligned} \operatorname{stress}_{i} &\sim N \left(\alpha_{j[i],k[i]} + \beta_{1j[i],k[i]}(\operatorname{experien}), \sigma^2 \right) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{wardtype}_{\operatorname{special\ care}}) \\ &\gamma^{\beta_{1}}_{0} + \gamma^{\beta_{1}}_{1}(\operatorname{wardtype}_{\operatorname{special\ care}}) \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{1j}} \\ \rho_{\beta_{1j}\alpha_{j}} & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{k} \\ &\beta_{1k} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma_{2}^{\alpha}(\operatorname{hospsize}_{\operatorname{small}}) \\ &\mu_{\beta_{1k}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{k}} & \rho_{\alpha_{k}\beta_{1k}} \\ \rho_{\beta_{1k}\alpha_{k}} & \sigma^2_{\beta_{1k}} \end{array} \right) \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

02:00
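Writing `(experien|wardid)` and `(experien|hospital)` as separate terms works as nesting here because `wardid` appears to be unique across hospitals (in the printout above, hospital 1's wards 1 and 2 have `wardid` 11 and 12), whereas `ward` restarts within each hospital. A toy base R sketch, using made-up data rather than the `nurses` file, of why a within-hospital ID like `ward` would wrongly pool wards from different hospitals:

```r
# Hypothetical toy data: 2 hospitals, each with wards numbered 1 and 2
toy <- data.frame(
  hospital = c(1, 1, 1, 1, 2, 2, 2, 2),
  ward     = c(1, 1, 2, 2, 1, 1, 2, 2)
)

# A globally unique ward ID; the hospital * 10 + ward rule is an assumption
# that mimics how the nurses IDs look (hospital 1, ward 2 -> 12)
toy$wardid <- toy$hospital * 10 + toy$ward

n_ward   <- length(unique(toy$ward))    # 2: "ward 1" lumps both hospitals together
n_wardid <- length(unique(toy$wardid))  # 4: one level per actual ward
n_combo  <- nlevels(interaction(toy$hospital, toy$ward, drop = TRUE))  # 4

c(ward = n_ward, wardid = n_wardid, hospital_x_ward = n_combo)
```

If an ID were only unique within its cluster, lme4's nesting shorthand `(experien | hospital/ward)`, which expands to `(experien | hospital) + (experien | hospital:ward)`, is the usual alternative.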


```r
lmer(stress ~ experien * wardtype + hospsize +
       (experien|wardid) + (experien|hospital),
     data = nurses)
```

Model 3

Fit the following model

$$ \small \begin{aligned} \operatorname{stress}_{i} &\sim N \left(\alpha_{j[i],k[i]} + \beta_{1j[i],k[i]}(\operatorname{experien}) + \beta_{2}(\operatorname{age}), \sigma^2 \right) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{expcon}_{\operatorname{experiment}}) \\ &\mu_{\beta_{1j}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & 0 \\ 0 & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{k} \\ &\beta_{1k} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\mu_{\alpha_{k}} \\ &\mu_{\beta_{1k}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{k}} & 0 \\ 0 & \sigma^2_{\beta_{1k}} \end{array} \right) \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

02:00
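The zeros in the covariance matrices above correspond to lme4's double-bar syntax. A sketch, assuming {lme4} is installed, that uses its exported helper `expandDoubleVerts()` to show how `(experien || wardid)` is rewritten into separate, uncorrelated terms (no model is fit):

```r
library(lme4)

f <- stress ~ experien + age + expcon +
  (experien || wardid) + (experien || hospital)

# Rewrite each `||` term as independent (1 | g) and (0 + x | g) terms
f_expanded <- expandDoubleVerts(f)
f_expanded

# List the resulting random-effects terms, one per variance component
bars <- findbars(f_expanded)
vapply(bars, function(b) paste(deparse(b), collapse = ""), character(1))
```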


```r
lmer(stress ~ experien + age + expcon +
       (experien||wardid) + (experien||hospital),
     data = nurses)

# or, equivalently
lmer(stress ~ experien + age + expcon +
       (1|wardid) + (0 + experien|wardid) +
       (1|hospital) + (0 + experien|hospital),
     data = nurses)
```

Model 4

Fit the following model

$$ \scriptsize \begin{aligned} \operatorname{stress}_{i} &\sim N \left(\alpha_{j[i],k[i]} + \beta_{1j[i],k[i]}(\operatorname{experien}) + \beta_{2}(\operatorname{age}), \sigma^2 \right) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{expcon}_{\operatorname{experiment}}) + \gamma_{2}^{\alpha}(\operatorname{wardtype}_{\operatorname{special\ care}}) \\ &\mu_{\beta_{1j}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{1j}} \\ \rho_{\beta_{1j}\alpha_{j}} & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{k} \\ &\beta_{1k} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma_{2}^{\alpha}(\operatorname{hospsize}_{\operatorname{small}}) \\ &\gamma^{\beta_{1}}_{0} + \gamma^{\beta_{1}}_{1}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma^{\beta_{1}}_{2}(\operatorname{hospsize}_{\operatorname{small}}) \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{k}} & \rho_{\alpha_{k}\beta_{1k}} \\ \rho_{\beta_{1k}\alpha_{k}} & \sigma^2_{\beta_{1k}} \end{array} \right) \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

02:00


```r
lmer(stress ~ experien * hospsize + age + expcon + wardtype +
       (experien|wardid) + (experien|hospital),
     data = nurses)
```

Model 5

Fit the following model

$$ \small \begin{aligned} \operatorname{expcon}_{i} &\sim \operatorname{Binomial}(n = 1, \operatorname{prob}_{\operatorname{expcon} = \operatorname{experiment}} = \widehat{P}) \\ \log\left[\frac{\hat{P}}{1 - \hat{P}} \right] &=\alpha_{j[i],k[i]} + \beta_{1j[i]}(\operatorname{age}) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\mu_{\alpha_{j}} \\ &\mu_{\beta_{1j}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{1j}} \\ \rho_{\beta_{1j}\alpha_{j}} & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \alpha_{k} &\sim N \left(\mu_{\alpha_{k}}, \sigma^2_{\alpha_{k}} \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

03:00
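For a binomial model with a factor response, R's `binomial()` family treats the first factor level as failure and every other level as success. `factor()` sorts levels alphabetically, so assuming the other level of `expcon` is `control`, the model estimates the probability of `experiment`, matching \(\operatorname{prob}_{\operatorname{expcon} = \operatorname{experiment}}\) above. A base R sketch with made-up data and an intercept-only `glm()` (no random effects):

```r
# Hypothetical toy data: 7 "control" and 3 "experiment" observations
y <- factor(c(rep("control", 7), rep("experiment", 3)))
levels(y)  # "control" comes first, so it is treated as failure

# Intercept-only logistic regression, analogous to the fixed part of glmer()
fit <- glm(y ~ 1, family = binomial(link = "logit"))

# Back-transform the intercept: the fitted probability is P(y == "experiment")
p_hat <- plogis(coef(fit)[["(Intercept)"]])
p_hat                    # approximately 0.3
mean(y == "experiment")  # the sample proportion, 0.3
```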


```r
nurses <- nurses %>%
  mutate(expcon = factor(expcon))

# `data` may only be supplied once
glmer(expcon ~ age + (age|wardid) + (1|hospital),
      data = nurses,
      family = binomial(link = "logit"))
```

Model 6

Fit the following model

$$ \scriptsize \begin{aligned} \operatorname{expcon}_{i} &\sim \operatorname{Binomial}(n = 1, \operatorname{prob}_{\operatorname{expcon} = \operatorname{experiment}} = \widehat{P}) \\ \log\left[\frac{\hat{P}}{1 - \hat{P}} \right] &=\alpha_{j[i],k[i]} + \beta_{1j[i]}(\operatorname{age}) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{wardtype}_{\operatorname{special\ care}}) \\ &\mu_{\beta_{1j}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{1j}} \\ \rho_{\beta_{1j}\alpha_{j}} & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \alpha_{k} &\sim N \left(\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma_{2}^{\alpha}(\operatorname{hospsize}_{\operatorname{small}}), \sigma^2_{\alpha_{k}} \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

02:00


```r
glmer(expcon ~ hospsize + age + wardtype + (age|wardid) + (1|hospital),
      data = nurses,
      family = binomial(link = "logit"))
```

Model 7

Fit the following model

$$ \scriptsize \begin{aligned} \operatorname{expcon}_{i} &\sim \operatorname{Binomial}(n = 1, \operatorname{prob}_{\operatorname{expcon} = \operatorname{experiment}} = \widehat{P}) \\ \log\left[\frac{\hat{P}}{1 - \hat{P}} \right] &=\alpha_{j[i],k[i]} + \beta_{1j[i],k[i]}(\operatorname{age}) + \beta_{2}(\operatorname{gender}_{\operatorname{Male}}) + \beta_{3}(\operatorname{age} \times \operatorname{gender}_{\operatorname{Male}}) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{1j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{wardtype}_{\operatorname{special\ care}}) \\ &\mu_{\beta_{1j}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{1j}} \\ \rho_{\beta_{1j}\alpha_{j}} & \sigma^2_{\beta_{1j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{k} \\ &\beta_{1k} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma_{2}^{\alpha}(\operatorname{hospsize}_{\operatorname{small}}) \\ &\mu_{\beta_{1k}} \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{k}} & \rho_{\alpha_{k}\beta_{1k}} \\ \rho_{\beta_{1k}\alpha_{k}} & \sigma^2_{\beta_{1k}} \end{array} \right) \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

02:00


```r
glmer(expcon ~ age * gender + wardtype + hospsize +
        (age|wardid) + (age|hospital),
      data = nurses,
      family = binomial(link = "logit"))
```

Model 8

Fit the following model

$$ \scriptsize \begin{aligned} \operatorname{expcon}_{i} &\sim \operatorname{Binomial}(n = 1, \operatorname{prob}_{\operatorname{expcon} = \operatorname{experiment}} = \widehat{P}) \\ \log\left[\frac{\hat{P}}{1 - \hat{P}} \right] &=\alpha_{j[i],k[i]} + \beta_{1}(\operatorname{age}) + \beta_{2}(\operatorname{gender}_{\operatorname{Male}}) + \beta_{3j[i],k[i]}(\operatorname{experien}) \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{j} \\ &\beta_{3j} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{wardtype}_{\operatorname{special\ care}}) \\ &\gamma^{\beta_{3}}_{0} + \gamma^{\beta_{3}}_{1}(\operatorname{wardtype}_{\operatorname{special\ care}}) \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{j}} & \rho_{\alpha_{j}\beta_{3j}} \\ \rho_{\beta_{3j}\alpha_{j}} & \sigma^2_{\beta_{3j}} \end{array} \right) \right) \text{, for wardid j = 1,} \dots \text{,J} \\ \left( \begin{array}{c} \begin{aligned} &\alpha_{k} \\ &\beta_{3k} \end{aligned} \end{array} \right) &\sim N \left( \left( \begin{array}{c} \begin{aligned} &\gamma_{0}^{\alpha} + \gamma_{1}^{\alpha}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma_{2}^{\alpha}(\operatorname{hospsize}_{\operatorname{small}}) \\ &\gamma^{\beta_{3}}_{0} + \gamma^{\beta_{3}}_{1}(\operatorname{hospsize}_{\operatorname{medium}}) + \gamma^{\beta_{3}}_{2}(\operatorname{hospsize}_{\operatorname{small}}) \end{aligned} \end{array} \right) , \left( \begin{array}{cc} \sigma^2_{\alpha_{k}} & \rho_{\alpha_{k}\beta_{3k}} \\ \rho_{\beta_{3k}\alpha_{k}} & \sigma^2_{\beta_{3k}} \end{array} \right) \right) \text{, for hospital k = 1,} \dots \text{,K} \end{aligned} $$

02:00


```r
glmer(expcon ~ age + gender + experien * wardtype + experien * hospsize +
        (experien|wardid) + (experien|hospital),
      data = nurses,
      family = binomial(link = "logit"))
```

The data

Twitter!

Real data, collected Wednesday, but anonymized

You can see the code I used to get the data, but you'll pull different data if you run it

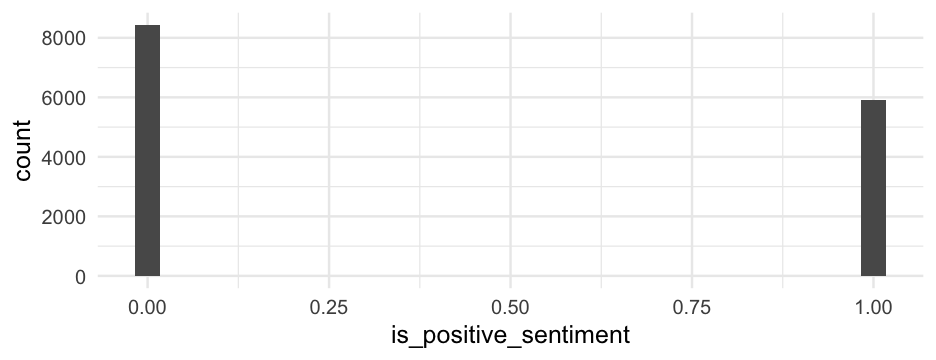

18,000 tweets including the hashtag #blm

Sentence-level text coded for sentiment using the {sentimentr} package, then averaged for the entire tweet

Read in the data

It's a little different because there's still a list column of hashtags. Use code like the following:

```r
library(tidyverse)
blm <- read_rds(here::here("data", "blm_sentiment.Rds"))
blm
## # A tibble: 14,339 x 21
##    user_id status_id trump_in_description followers_count friends_count listed_count statuses_count favourites_count account_created_at verified
##      <dbl>     <dbl> <lgl>                          <int>         <int>        <int>          <int>            <int> <date>             <lgl>   
##  1    1691     14339 FALSE                              964           859           16          22975            60680 2020-05-24         FALSE   
##  2    7740     14338 FALSE                              135           389            2          10624             1027 2009-07-25         FALSE   
##  3     313     14337 FALSE                              261            31            1            154               54 2013-01-06         FALSE   
##  4    5740     14336 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
##  5    5740      2927 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
##  6    5740      9379 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
##  7    5740      2457 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
##  8    5740      7854 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
##  9    5740      1550 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
## 10    5740     11789 FALSE                             4032          3872           23          23101            57150 2014-08-22         FALSE   
## # … with 14,329 more rows, and 11 more variables: tweet_created_at <dttm>, word_count <int>, is_reply <lgl>, is_quote <lgl>, has_url <lgl>, has_photo <lgl>,
## #   n_mentions <int>, hashtags <list>, favorite_count <int>, retweet_count <int>, is_positive_sentiment <int>
```

Getting more info

This is a data frame like any other, with one exception: the hashtags column is a list.

See all hashtags

```r
blm %>%
  unnest(hashtags) %>%
  count(hashtags, sort = TRUE) # %>%
  # View()
## # A tibble: 12,011 x 2
##    hashtags             n
##    <chr>            <int>
##  1 BLM              12209
##  2 blm               2231
##  3 BlackLivesMatter  2153
##  4 GeorgeFloyd       1473
##  5 SashaJohnson       559
##  6 racism             416
##  7 blacklivesmatter   410
##  8 LGBTQ              360
##  9 FBR                291
## 10 Resist             289
## # … with 12,001 more rows
```

List column

We can `unnest()` to see all of them, but we can't use the unnested data in modeling, because each tweet is repeated once per hashtag.
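A toy sketch (a made-up tibble, not the real `blm` data) of the difference: unnesting a list column repeats each tweet once per hashtag, while base R's `lengths()`, a vectorized `length()` for lists, returns one number per tweet that can go straight into a model. It is an alternative to a `rowwise()` + `length()` approach, and both give the same counts:

```r
library(dplyr)
library(tidyr)

# Hypothetical toy data: three tweets with a list column of hashtags
toy <- tibble(
  status_id = 1:3,
  hashtags  = list("BLM", c("BLM", "GeorgeFloyd"), c("blm", "racism", "Resist"))
)

# Unnesting gives one row per hashtag, so tweets are duplicated
toy_long <- toy %>% unnest(hashtags)
nrow(toy_long)  # 6 rows for 3 tweets

# One count per tweet: vectorized lengths() ...
toy <- toy %>% mutate(n_hashtags = lengths(hashtags))

# ... matches rowwise() + length()
toy_rw <- toy %>%
  rowwise() %>%
  mutate(n_hashtags_rw = length(hashtags)) %>%
  ungroup()

toy_rw %>% select(status_id, n_hashtags, n_hashtags_rw)
```

One caution: if tweets without hashtags were stored as `NA` rather than as an empty vector, both approaches would count them as 1. That shouldn't matter here, since every tweet was pulled for #blm.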

Pull more features

Let's get the number of hashtags in each tweet

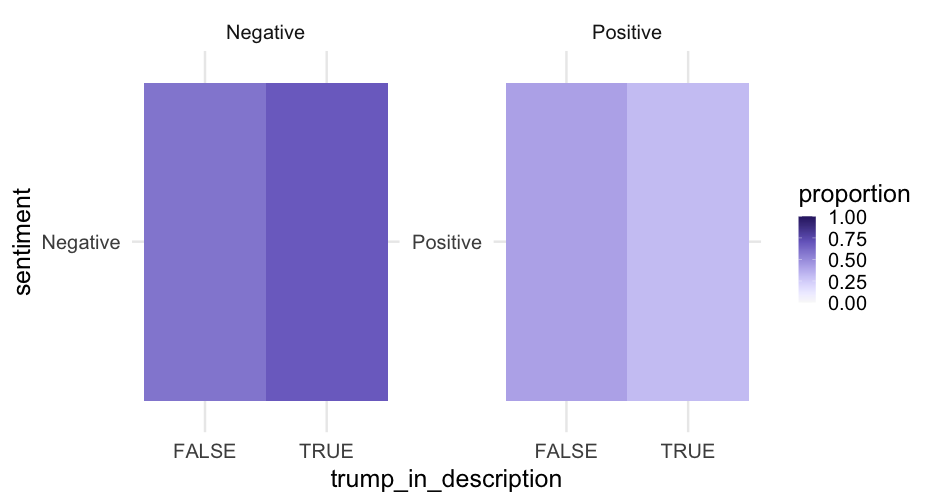

```r
blm <- blm %>%
  rowwise() %>%
  mutate(n_hashtags = length(hashtags)) %>%
  ungroup()

blm %>%
  select(user_id, n_hashtags)
## # A tibble: 14,339 x 2
##    user_id n_hashtags
##      <dbl>      <int>
##  1    1691          1
##  2    7740          1
##  3     313         16
##  4    5740          5
##  5    5740          5
##  6    5740          7
##  7    5740          5
##  8    5740          7
##  9    5740          7
## 10    5740          5
## # … with 14,329 more rows
```

What about trump_in_description?

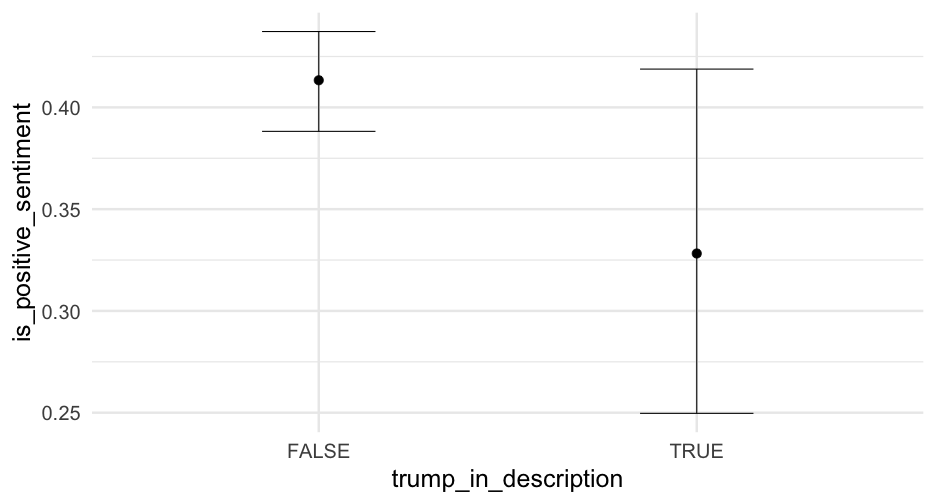

```r
trump_proportions <- blm %>%
  mutate(sentiment = ifelse(
    is_positive_sentiment > 0, "Positive", "Negative"
    )
  ) %>%
  count(trump_in_description, sentiment) %>%
  group_by(trump_in_description) %>%
  mutate(proportion = n/sum(n))

trump_proportions
## # A tibble: 4 x 4
## # Groups:   trump_in_description [2]
##   trump_in_description sentiment     n proportion
##   <lgl>                <chr>     <int>      <dbl>
## 1 FALSE                Negative   8130  0.5848921
## 2 FALSE                Positive   5770  0.4151079
## 3 TRUE                 Negative    301  0.6856492
## 4 TRUE                 Positive    138  0.3143508
```

Account creation

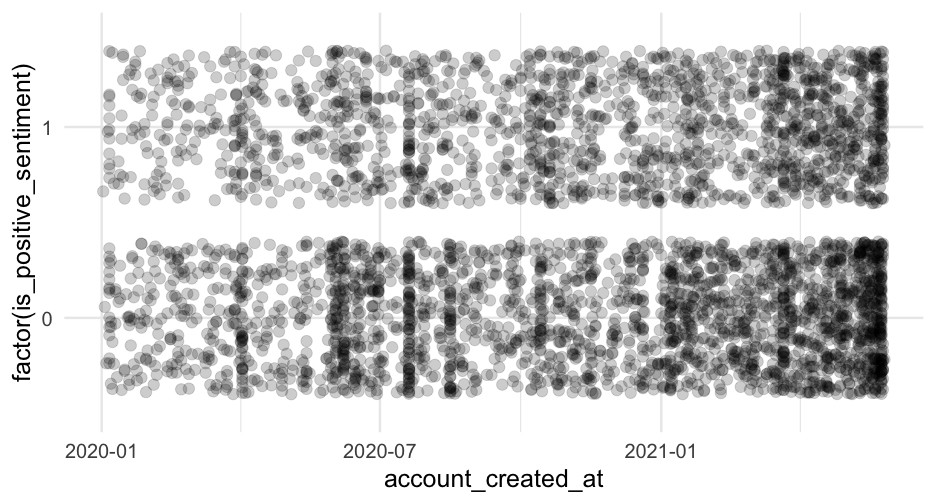

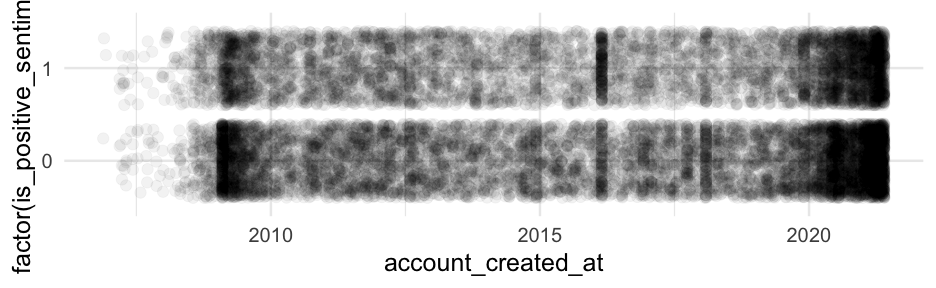

In reality I would probably explore my data for a bit longer, unless I already knew a lot about it. For now, let's do just one more: the relation between when the account was created and whether the tweet's sentiment was positive.

```r
ggplot(blm, aes(account_created_at, factor(is_positive_sentiment))) +
  geom_jitter(width = 0, alpha = 0.05)
```

Last bit

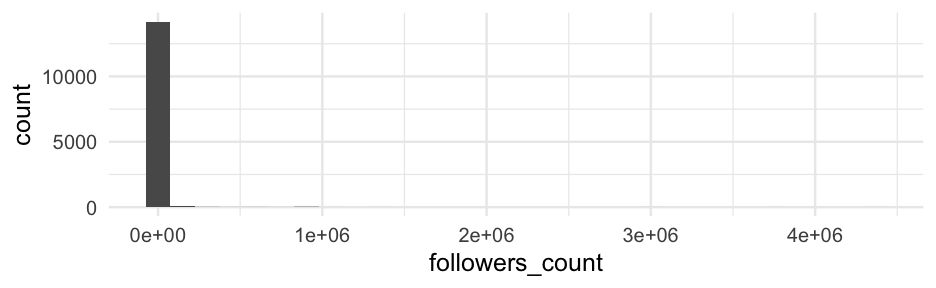

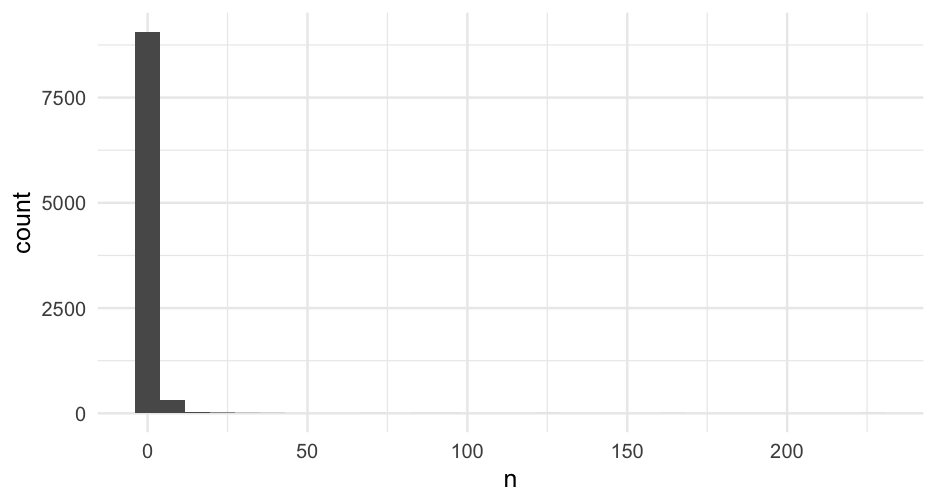

Sample size issues

The number of tweets per person varies a lot.

When \(n = 1\) (a person with a single tweet), it's not a problem in theory, although I've had estimation issues with it in the past.

You might consider including the number of tweets a person posted as a predictor.

- A high count could indicate that the account belongs to a bot or a journalist.

See here for more information
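A sketch of computing tweets-per-person as a person-level predictor with dplyr's `add_count()`, on made-up data (in the real data the grouping column would be `user_id`; the new column name `n_tweets` is hypothetical):

```r
library(dplyr)

# Hypothetical toy data: user 1 tweets three times, users 2 and 3 fewer
toy <- tibble(
  user_id = c(1, 1, 1, 2, 3, 3),
  is_positive_sentiment = c(1, 0, 0, 1, 0, 1)
)

# Attach each person's total number of tweets to every one of their rows
toy <- toy %>% add_count(user_id, name = "n_tweets")

# Distribution of tweets per person: how many people have n = 1, 2, 3, ...
toy %>%
  distinct(user_id, n_tweets) %>%
  count(n_tweets)
```

`n_tweets` could then enter the model, e.g. `is_positive_sentiment ~ n_tweets + (1 | user_id)`, possibly on a log scale if the counts are very skewed.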

Maximum likelihood version

```r
library(lme4)
m0_ml <- glmer(is_positive_sentiment ~ 1 + (1|user_id),
               data = blm,
               family = binomial(link = "logit"))
arm::display(m0_ml)
## glmer(formula = is_positive_sentiment ~ 1 + (1 | user_id), data = blm, 
##     family = binomial(link = "logit"))
## coef.est  coef.se 
##    -0.40     0.02 
## 
## Error terms:
##  Groups   Name        Std.Dev.
##  user_id  (Intercept) 0.95    
##  Residual             1.00    
## ---
## number of obs: 14339, groups: user_id, 9454
## AIC = 18757.7, DIC = 10514.1
## deviance = 14633.9
```

Variability

The log-odds varied between people with a standard deviation of 0.95.
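To translate the log-odds mean (-0.40) and SD (0.95) onto the probability scale, base R's `plogis()` is the inverse logit, the same transformation as `brms::inv_logit_scaled()` with its default bounds of 0 and 1. A sketch that plugs in the `glmer()` estimates, assuming the person effects are normally distributed:

```r
# Estimates from the glmer() fit above
mu    <- -0.40  # fixed intercept (log-odds)
sigma <-  0.95  # SD of the person intercepts (log-odds)

# Probability for a person exactly at the average (log-odds = mu)
plogis(mu)

# Range covering roughly the middle 95% of people
plogis(mu + c(-1.96, 1.96) * sigma)

# Population-average probability: average over the whole person distribution.
# This differs from plogis(mu) because the inverse logit is nonlinear.
set.seed(123)
marginal <- mean(plogis(rnorm(1e5, mean = mu, sd = sigma)))
marginal
```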

The probabilities that a person one standard deviation below or above the average posts a positive tweet were estimated at:

```r
# Probability for an individual 1 SD below
brms::inv_logit_scaled(-0.40 - 0.95)
## [1] 0.2058704

# Probability for an individual 1 SD above
brms::inv_logit_scaled(-0.40 + 0.95)
## [1] 0.6341356
```

Plot the variability

First, pull the random-effect estimates (each person's deviation from the fixed intercept)

```r
library(broom.mixed)
tidy_m0_ml <- tidy(m0_ml, effects = "ran_vals", conf.int = TRUE) %>%
  mutate(level = fct_reorder(level, estimate))
```

Next create the plot

```r
ggplot(tidy_m0_ml, aes(estimate, level)) +
  geom_linerange(aes(xmin = conf.low, xmax = conf.high), alpha = 0.01) +
  geom_point(color = "#1DA1F2") +
  # get rid of some plot elements
  theme(axis.text.y = element_blank(),
        axis.title.y = element_blank(),
        panel.grid.major.y = element_blank(),
        panel.grid.minor = element_blank())
```

Fit using Bayes

You try first. Fit the same model using Bayes. Go ahead and use the default priors (i.e., don't specify any).

04:00
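If you're unsure which priors `brm()` will use when none are given, `brms::get_prior()` lists them without compiling or sampling. A sketch on made-up data (assumes {brms} is installed; the column names mirror `blm`, but the values are random):

```r
library(brms)

# Hypothetical toy data with the same structure as the blm model
set.seed(1)
toy <- data.frame(
  user_id = rep(1:20, each = 3),
  is_positive_sentiment = rbinom(60, size = 1, prob = 0.4)
)

# Default priors for the intercept-only multilevel logistic model
pr <- get_prior(is_positive_sentiment ~ 1 + (1 | user_id),
                data = toy,
                family = bernoulli(link = "logit"))
pr
```

In the printed table, look at the `Intercept` and `sd` rows: these defaults are weakly informative rather than flat, and they can change across {brms} versions, so check the output for your installed version.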

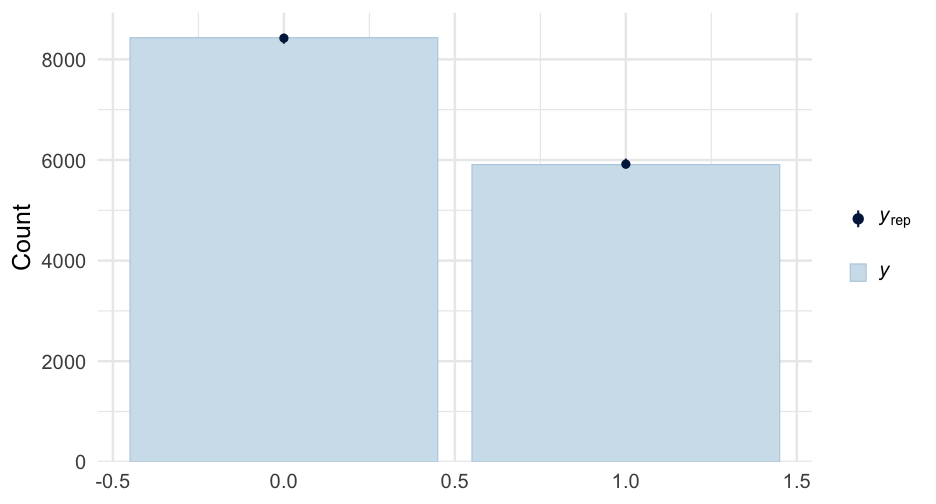

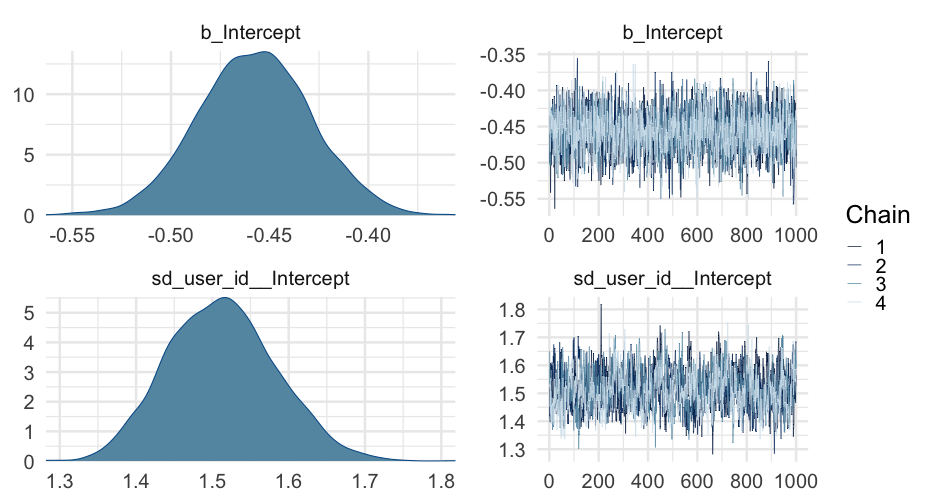

```r
library(brms)
m0_b <- brm(is_positive_sentiment ~ 1 + (1|user_id),
            data = blm,
            family = bernoulli(link = "logit"),
            cores = 4,
            backend = "cmdstanr")
## Running MCMC with 4 parallel chains...
## 
## Chain 1 Iteration: 1 / 2000 [ 0%] (Warmup)
## Chain 2 Iteration: 1 / 2000 [ 0%] (Warmup)
## Chain 3 Iteration: 1 / 2000 [ 0%] (Warmup)
## Chain 4 Iteration: 1 / 2000 [ 0%] (Warmup)
## ...
## Chain 2 finished in 112.4 seconds.
## Chain 1 finished in 113.4 seconds.
## Chain 4 finished in 114.1 seconds.
## Chain 3 finished in 115.0 seconds.
## 
## All 4 chains finished successfully.
## Mean chain execution time: 113.7 seconds.
## Total execution time: 115.4 seconds.
```

Summary

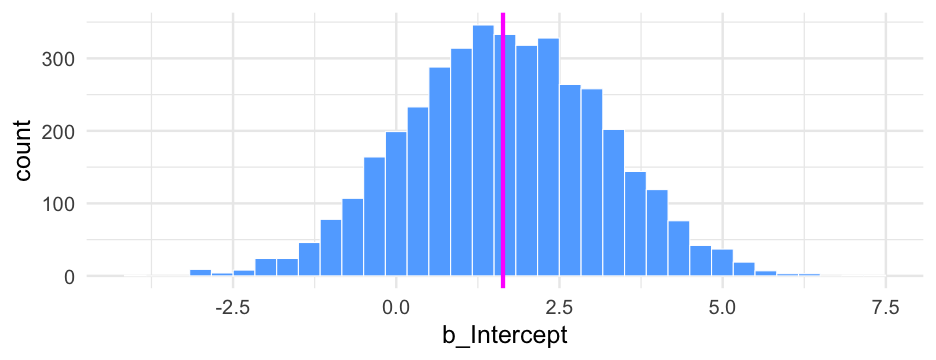

Notice the variance is fairly different here

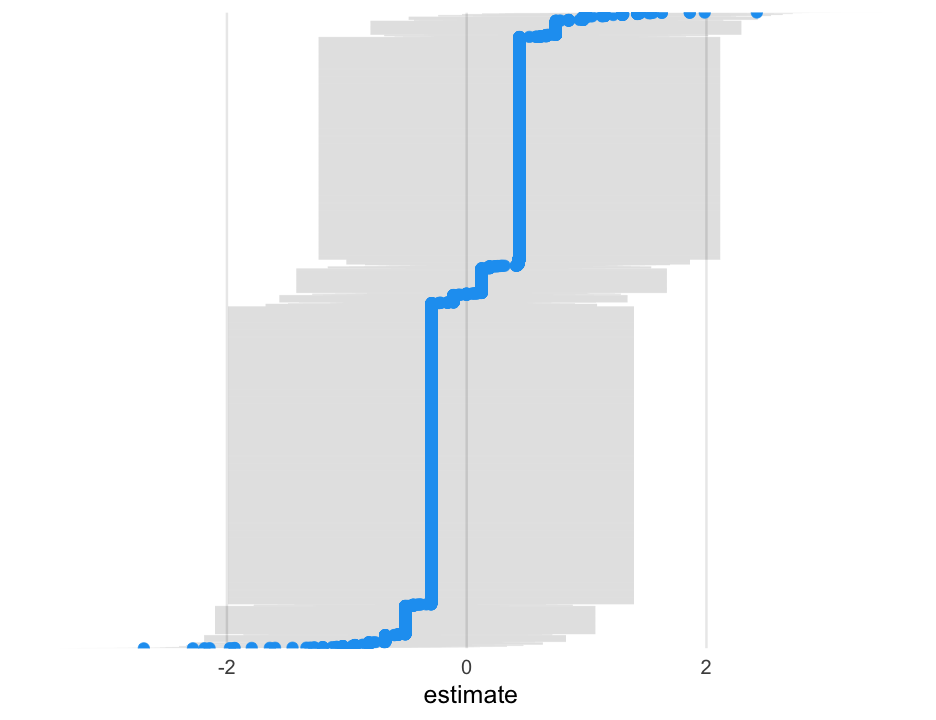

```r
summary(m0_b)
##  Family: bernoulli 
##   Links: mu = logit 
## Formula: is_positive_sentiment ~ 1 + (1 | user_id) 
##    Data: blm (Number of observations: 14339) 
## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
##          total post-warmup samples = 4000
## 
## Group-Level Effects: 
## ~user_id (Number of levels: 9454) 
##               Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sd(Intercept)     1.51      0.07     1.38     1.65 1.01      528     1224
## 
## Population-Level Effects: 
##           Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## Intercept    -0.46      0.03    -0.51    -0.40 1.00     2643     2906
## 
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).
```

Plot person-estimates

We have to go to {tidybayes} for this

General purpose tool to pull lots of different things from our model and plot them

For now, we'll do the plotting ourselves

Let's start by looking at what's actually in the model

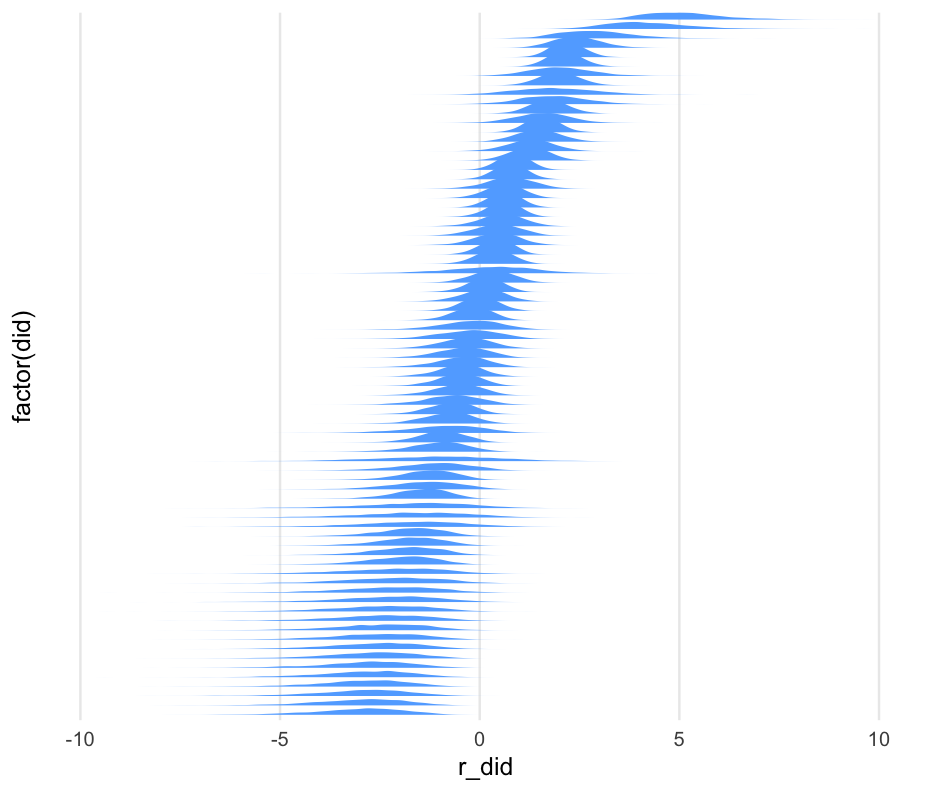

Here `r_*` stands for "random": these are each user's deviations from the population-level (average) intercept, on the logit scale.
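To make "deviation from the average" concrete: a user's predicted log-odds of a positive-sentiment tweet is `b_Intercept` plus that user's `r_user_id` value, and base R's `plogis()` (the inverse logit) turns log-odds into a probability. The sketch below plugs in the point estimates from `summary(m0_b)` (intercept -0.46, user SD 1.51) for three hypothetical users whose deviations sit one SD below, at, and one SD above the average. It ignores posterior uncertainty, so treat it as an illustration, not a model result.

```r
# Point estimates read off summary(m0_b), logit scale
b_intercept <- -0.46   # population-level intercept (b_Intercept)
sd_user     <- 1.51    # SD of user deviations (sd_user_id__Intercept)

# Hypothetical users: deviation (r_user_id) of -1 SD, 0, and +1 SD
r_user <- c(below = -sd_user, average = 0, above = sd_user)

# User-specific log-odds = population intercept + user deviation
user_logodds <- b_intercept + r_user

# Inverse logit back to the probability scale; names kept explicitly
user_prob <- setNames(plogis(user_logodds), names(r_user))
round(user_prob, 3)  # roughly 0.12, 0.39, 0.74
```

With the actual posterior you would do the same sum draw by draw (for example on the draws returned by `gather_draws()`), so the uncertainty carries through to the probability scale.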

```r
library(tidybayes)
get_variables(m0_b)
##  [1] "b_Intercept"              "sd_user_id__Intercept"    "Intercept"                "r_user_id[1,Intercept]"   "r_user_id[2,Intercept]"  
##  [6] "r_user_id[3,Intercept]"   "r_user_id[4,Intercept]"   "r_user_id[5,Intercept]"   "r_user_id[6,Intercept]"   "r_user_id[7,Intercept]"  
## ...
## [996] "r_user_id[993,Intercept]" "r_user_id[994,Intercept]" "r_user_id[995,Intercept]" "r_user_id[996,Intercept]" "r_user_id[997,Intercept]"
##  [ reached getOption("max.print") -- omitted 17918 entries ]
```

Pull random vars

The random effect name is `r_user_id`. Inside the brackets we assign new names to its two indices: `id` for the user and `term` for the coefficient.
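What the bracket spec does can be sketched in base R: each name such as `r_user_id[2,Intercept]` is split at the comma, and the two pieces land in columns called `id` and `term` because those are the names we wrote inside the brackets. This only illustrates the idea on a few hand-typed names; it is not how {tidybayes} implements `gather_draws()`.

```r
# A few parameter names in the form returned by get_variables(m0_b)
vars <- c("b_Intercept", "sd_user_id__Intercept", "Intercept",
          "r_user_id[1,Intercept]", "r_user_id[2,Intercept]",
          "r_user_id[10,Intercept]")

# Keep only the user-level deviations
re_vars <- grep("^r_user_id\\[", vars, value = TRUE)

# Drop "r_user_id[" and the closing "]", then split "id,term" on the comma
inside <- sub("^r_user_id\\[(.*)\\]$", "\\1", re_vars)
parts  <- strsplit(inside, ",", fixed = TRUE)

# One row per parameter, with the indices named as in r_user_id[id, term]
re_index <- data.frame(
  .variable = "r_user_id",
  id        = as.integer(vapply(parts, `[`, character(1), 1)),
  term      = vapply(parts, `[`, character(1), 2)
)
re_index
```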

```r
m0_id_re <- gather_draws(m0_b, r_user_id[id, term])
m0_id_re
## # A tibble: 37,816,000 x 7
## # Groups:   id, term, .variable [9,454]
##       id term      .chain .iteration .draw .variable     .value
##    <int> <chr>      <int>      <int> <int> <chr>          <dbl>
##  1     1 Intercept      1          1     1 r_user_id -0.0826422
##  2     1 Intercept      1          2     2 r_user_id -0.567424 
##  3     1 Intercept      1          3     3 r_user_id -0.546465 
##  4     1 Intercept      1          4     4 r_user_id -1.88725  
##  5     1 Intercept      1          5     5 r_user_id  1.12419  
##  6     1 Intercept      1          6     6 r_user_id -2.31118  
##  7     1 Intercept      1          7     7 r_user_id -0.249097 
##  8     1 Intercept      1          8     8 r_user_id -3.61696  
##  9     1 Intercept      1          9     9 r_user_id -1.6264   
## 10     1 Intercept      1         10    10 r_user_id  0.158484 
## # … with 37,815,990 more rows
```

Compute credible intervals

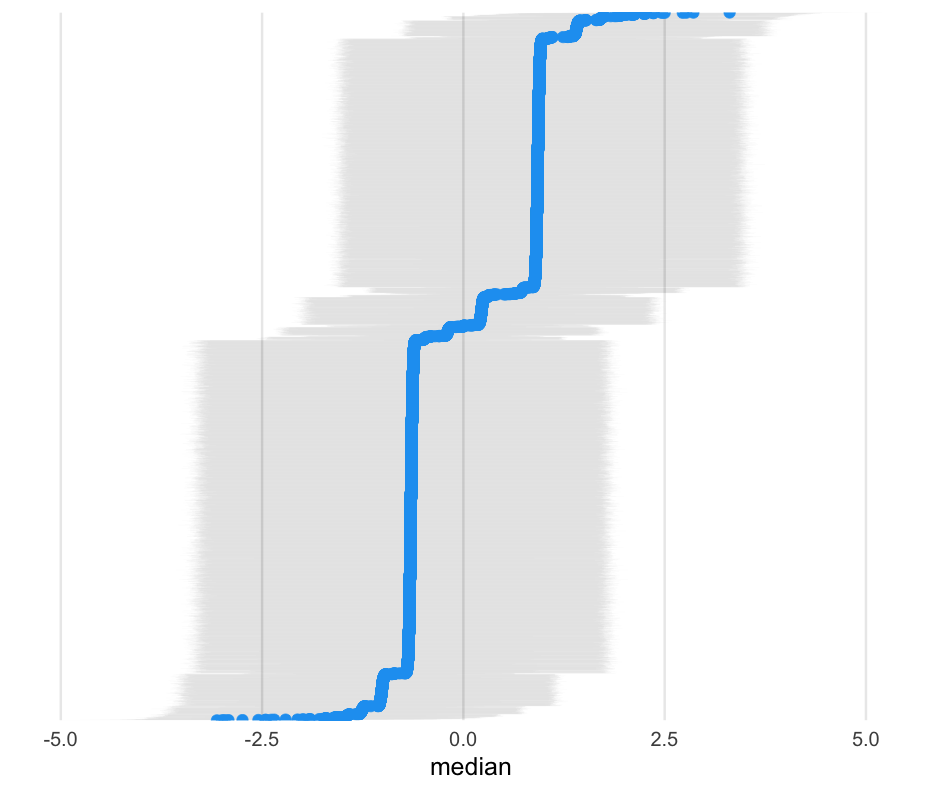

I recognize this part is complex, but you'll have the code for reference
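Stripped of the tidyverse plumbing, the next step takes each user's draws and reports the median plus the 2.5% and 97.5% quantiles, i.e., a 95% equal-tailed credible interval. A minimal base-R sketch, assuming simulated draws for three hypothetical users in place of the roughly 37.8 million rows in `m0_id_re`:

```r
set.seed(42)

# Hypothetical draws: 3 users x 4000 posterior draws each (simulated, NOT from m0_b)
n_draws <- 4000
draws <- data.frame(
  id     = rep(1:3, each = n_draws),
  .value = rnorm(3 * n_draws, mean = rep(c(-0.7, 0.9, 0.0), each = n_draws), sd = 1.3)
)

ids <- sort(unique(draws$id))

# For each id: median and 2.5% / 97.5% quantiles (a 95% equal-tailed interval).
# vapply() returns a 3 x n matrix, so transpose it to one row per id.
q <- t(vapply(ids, function(i) {
  quantile(draws$.value[draws$id == i], probs = c(0.5, 0.025, 0.975), names = FALSE)
}, numeric(3)))

id_summary <- data.frame(id = ids, median = q[, 1], lower = q[, 2], upper = q[, 3])

# Sort rows by posterior median
id_summary <- id_summary[order(id_summary$median), ]
id_summary
```

Sorting by the median mirrors the ordering that `fct_reorder(factor(id), median)` encodes in the factor levels for plotting. {tidybayes} also provides `median_qi()`, which computes this median-plus-quantile-interval summary directly.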

```r
id_qtiles <- m0_id_re %>%
  group_by(id) %>%
  # Three rows per id. In dplyr >= 1.1.0, returning more than one row per
  # group from summarize() is deprecated; reframe() is the replacement.
  summarize(
    probs  = c("median", "lower", "upper"),
    qtiles = quantile(.value, probs = c(0.5, 0.025, 0.975))
  ) %>%
  ungroup()

id_qtiles
## # A tibble: 28,362 x 3
##       id probs     qtiles
##    <int> <chr>      <dbl>
##  1     1 median -0.6739   
##  2     1 lower  -3.31484  
##  3     1 upper   1.746887 
##  4     2 median  0.9492155
##  5     2 lower  -1.605349 
##  6     2 upper   3.453275 
##  7     3 median -0.648632 
##  8     3 lower  -3.341358 
##  9     3 upper   1.803793 
## 10     4 median  0.9214355
## # … with 28,352 more rows
```

Move it wider

id_qtiles <- id_qtiles %>%
  pivot_wider(names_from = "probs", values_from = "qtiles") %>%
  mutate(id = fct_reorder(factor(id), median))
id_qtiles
## # A tibble: 9,454 x 4
## id median lower upper
## <fct> <dbl> <dbl> <dbl>
## 1 1 -0.6739 -3.31484 1.746887
## 2 2 0.9492155 -1.605349 3.453275
## 3 3 -0.648632 -3.341358 1.803793
## 4 4 0.9214355 -1.517492 3.542017
## 5 5 -0.626921 -3.264422 1.725803
## 6 6 0.930557 -1.599318 3.539588
## 7 7 -0.6482415 -3.349034 1.817699
## 8 8 -0.6443055 -3.212276 1.697759
## 9 9 -0.6500125 -3.371763 1.892287
## 10 10 -1.029665 -3.648958 1.221002
## # … with 9,444 more rows

m1_b <- brm(is_positive_sentiment ~ trump_in_description + has_antifa_hashtag +
              log(favorite_count + 1) + (1|user_id),
            data = blm,
            family = bernoulli(link = "logit"),
            cores = 4,
            backend = "cmdstanr")
## Running MCMC with 4 parallel chains...
##
## Chain 3 finished in 125.6 seconds.
## Chain 2 finished in 127.9 seconds.
## Chain 1 finished in 128.1 seconds.
## Chain 4 finished in 128.1 seconds.
##
## All 4 chains finished successfully.
## Mean chain execution time: 127.4 seconds.
## Total execution time: 128.6 seconds.

Summary

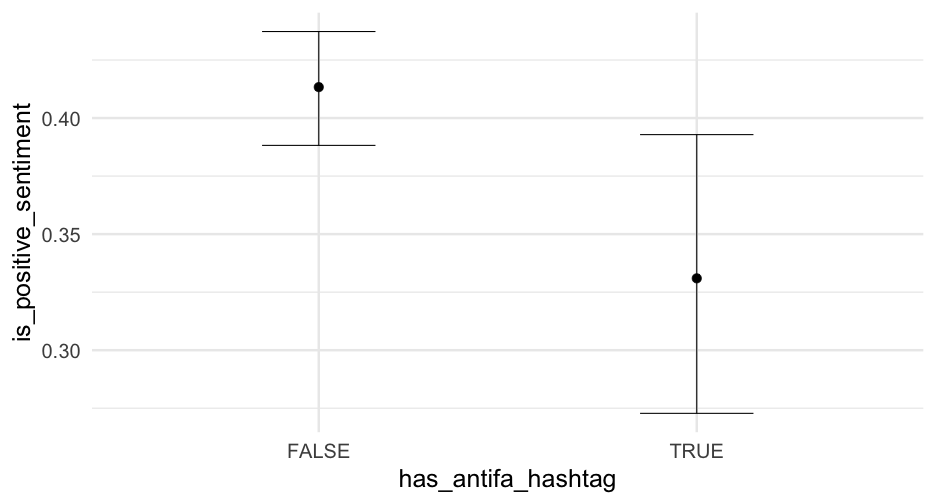

summary(m1_b)
##  Family: bernoulli
##   Links: mu = logit
## Formula: is_positive_sentiment ~ trump_in_description + has_antifa_hashtag + log(favorite_count + 1) + (1 | user_id)
##    Data: blm (Number of observations: 14339)
## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
##          total post-warmup samples = 4000
##
## Group-Level Effects:
## ~user_id (Number of levels: 9454)
##               Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sd(Intercept)     1.51      0.07     1.37     1.65 1.01      663     1341
##
## Population-Level Effects:
##                          Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## Intercept                   -0.47      0.03    -0.54    -0.40 1.00     2715     3062
## trump_in_descriptionTRUE    -0.36      0.19    -0.74     0.01 1.00     2735     2912
## has_antifa_hashtagTRUE      -0.35      0.13    -0.61    -0.10 1.00     3810     2924
## logfavorite_countP1          0.05      0.03    -0.00     0.10 1.00     3507     3361
##
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).

Posteriors

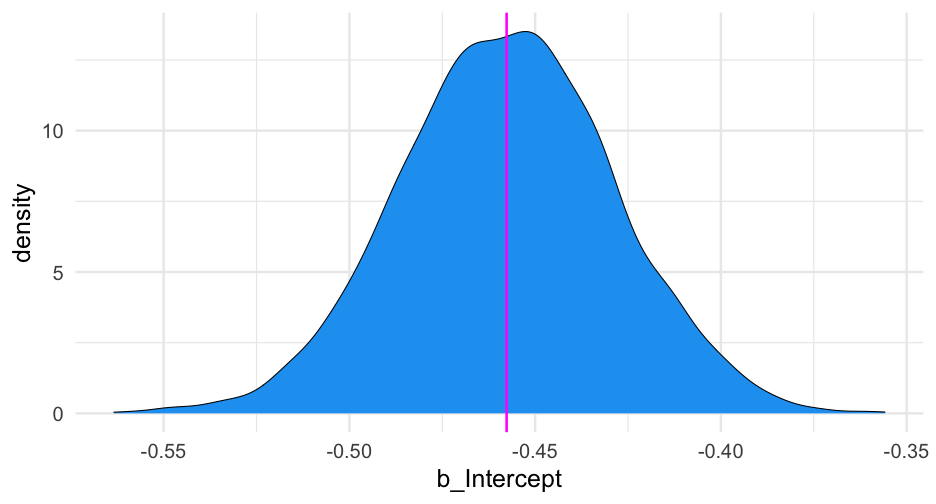

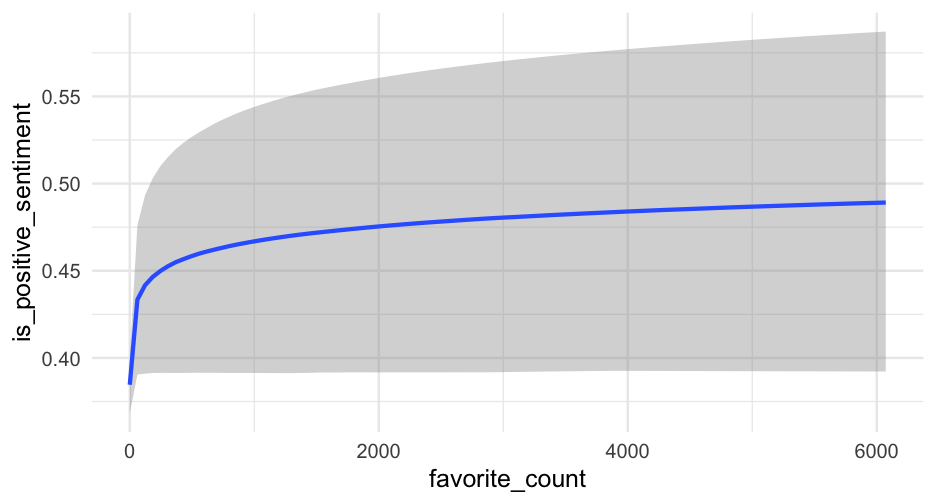

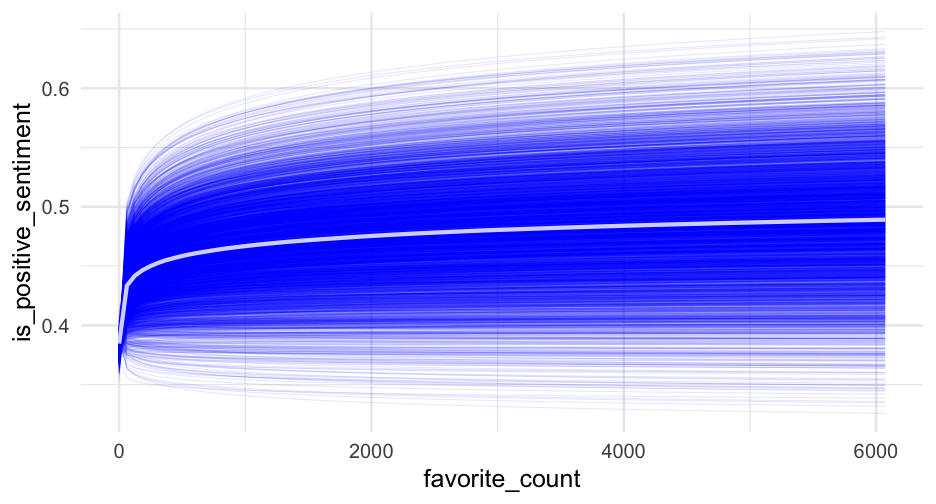

What's the probability that the coefficient for log(favorite_count + 1) is positive?

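# get_parameters() comes from the insight package
m1_posterior <- get_parameters(m1_b)
sum(m1_posterior$b_logfavorite_countP1 > 0) / nrow(m1_posterior)
## [1] 0.97175

97% probability! But note that this would probably just miss "significance" under a frequentist approach, because those tests are usually two-tailed: very roughly, a one-sided probability of 0.97 corresponds to a two-sided p-value of about 2 × (1 - 0.97) ≈ 0.06.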
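A small sketch of that calculation on simulated draws. The vector draws is hypothetical, loosely based on the estimate (0.05) and error (0.03) for logfavorite_countP1 in summary(m1_b), and the p-value conversion is only a rough analogy that assumes a flat prior and an approximately normal posterior.

```r
set.seed(2021)

# Hypothetical draws standing in for m1_posterior$b_logfavorite_countP1
draws <- rnorm(4000, mean = 0.05, sd = 0.03)

# Posterior probability that the coefficient is positive;
# mean() of a logical vector equals sum(...) / length(...)
p_positive <- mean(draws > 0)

# Rough frequentist analogue: a two-sided p-value is about twice
# the smaller posterior tail probability
p_two_sided <- 2 * min(p_positive, 1 - p_positive)

# Applied to the 0.97175 reported for m1_b
approx_p_m1 <- 2 * (1 - 0.97175)
c(p_positive = p_positive, p_two_sided = p_two_sided, approx_p_m1 = approx_p_m1)
```

The Bayesian quantity answers the question directly ("how probable is a positive effect?"), while the two-sided test asks a different question, which is why the two can seem to disagree.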

Warnings

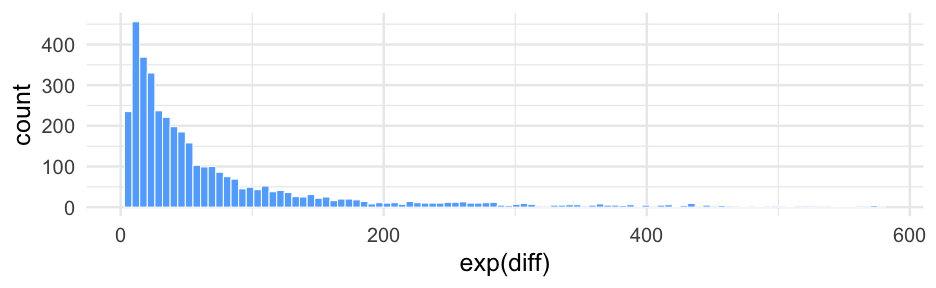

As I was playing around with different models, I sometimes ran into warnings.

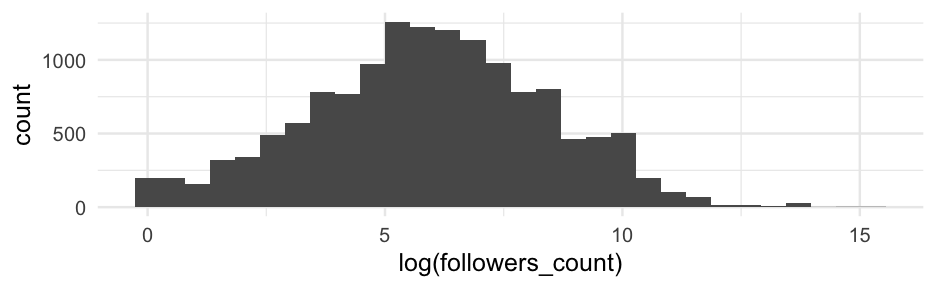

These were easy to solve in this case by just log-transforming the predictor variables that were highly skewed.
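To see why the log transform helps, here is a sketch comparing the skewness of a simulated right-skewed count variable before and after log(x + 1). The variable counts and the helper skewness() are hypothetical; base R has no built-in skewness function.

```r
set.seed(123)

# Hypothetical right-skewed count predictor (a stand-in for something
# like favorite_count): many small values and a long right tail
counts <- round(rlnorm(5000, meanlog = 2, sdlog = 1.5))

# Moment-based sample skewness
skewness <- function(x) {
  z <- x - mean(x)
  mean(z^3) / mean(z^2)^1.5
}

raw_skew <- skewness(counts)
# Adding 1 before logging keeps zero counts finite: log(0 + 1) = 0
log_skew <- skewness(log(counts + 1))
c(raw = raw_skew, logged = log_skew)
```

Pulling in the long tail keeps a handful of extreme values from dominating the linear predictor, which tends to make the sampler's job easier.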

For general guidance, see here.

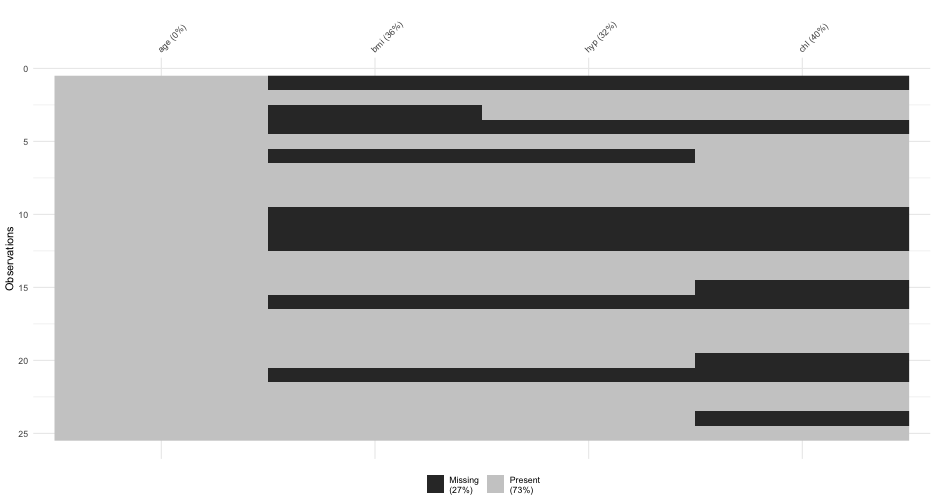

New data

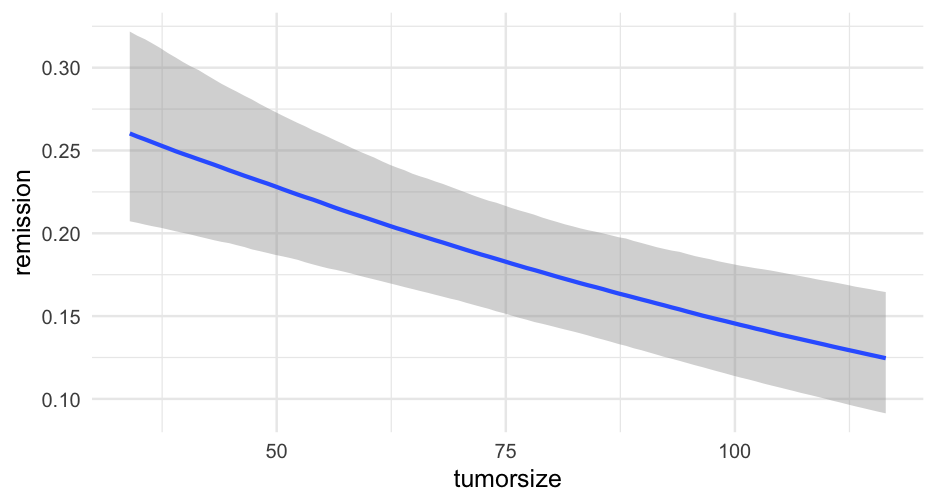

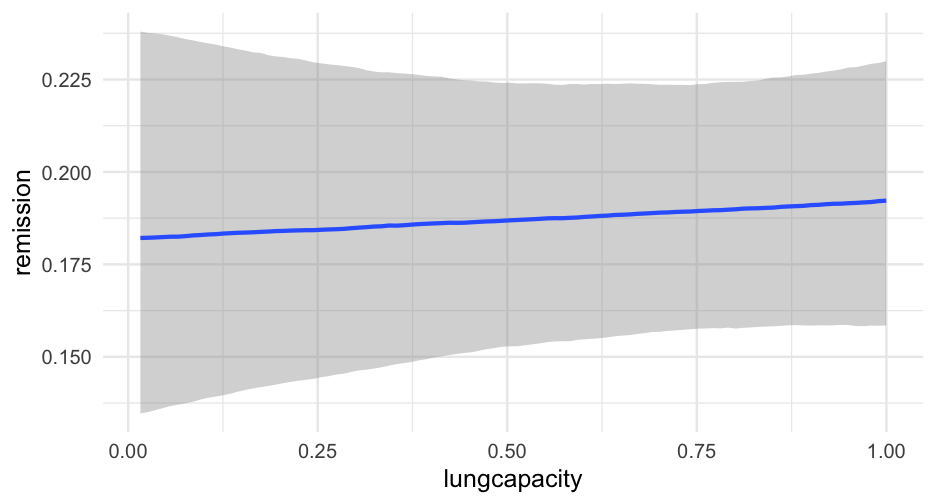

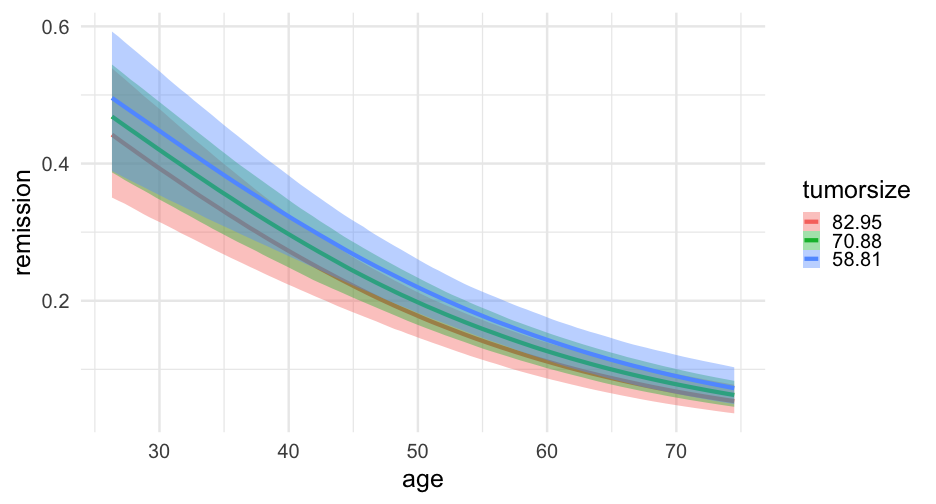

Lung cancer data: Patients nested in doctors

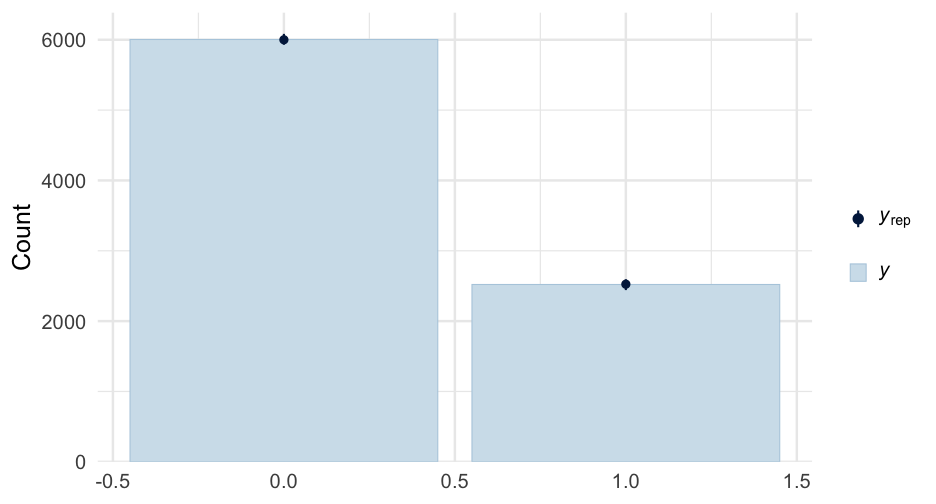

hdp <- read_csv("https://stats.idre.ucla.edu/stat/data/hdp.csv") %>%
  janitor::clean_names() %>%
  select(did, tumorsize, pain, lungcapacity, age, remission)
hdp
## # A tibble: 8,525 x 6
## did tumorsize pain lungcapacity age remission
## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>
## 1 1 67.98120 4 0.8010882 64.96824 0
## 2 1 64.70246 2 0.3264440 53.91714 0
## 3 1 51.56700 6 0.5650309 53.34730 0
## 4 1 86.43799 3 0.8484109 41.36804 0
## 5 1 53.40018 3 0.8864910 46.80042 0
## 6 1 51.65727 4 0.7010307 51.92936 0
## 7 1 78.91707 3 0.8908539 53.82926 0
## 8 1 69.83325 3 0.6608795 46.56223 0
## 9 1 62.85259 4 0.9088714 54.38936 0
## 10 1 71.77790 5 0.9593268 50.54465 0
## # … with 8,515 more rows

Predict remission

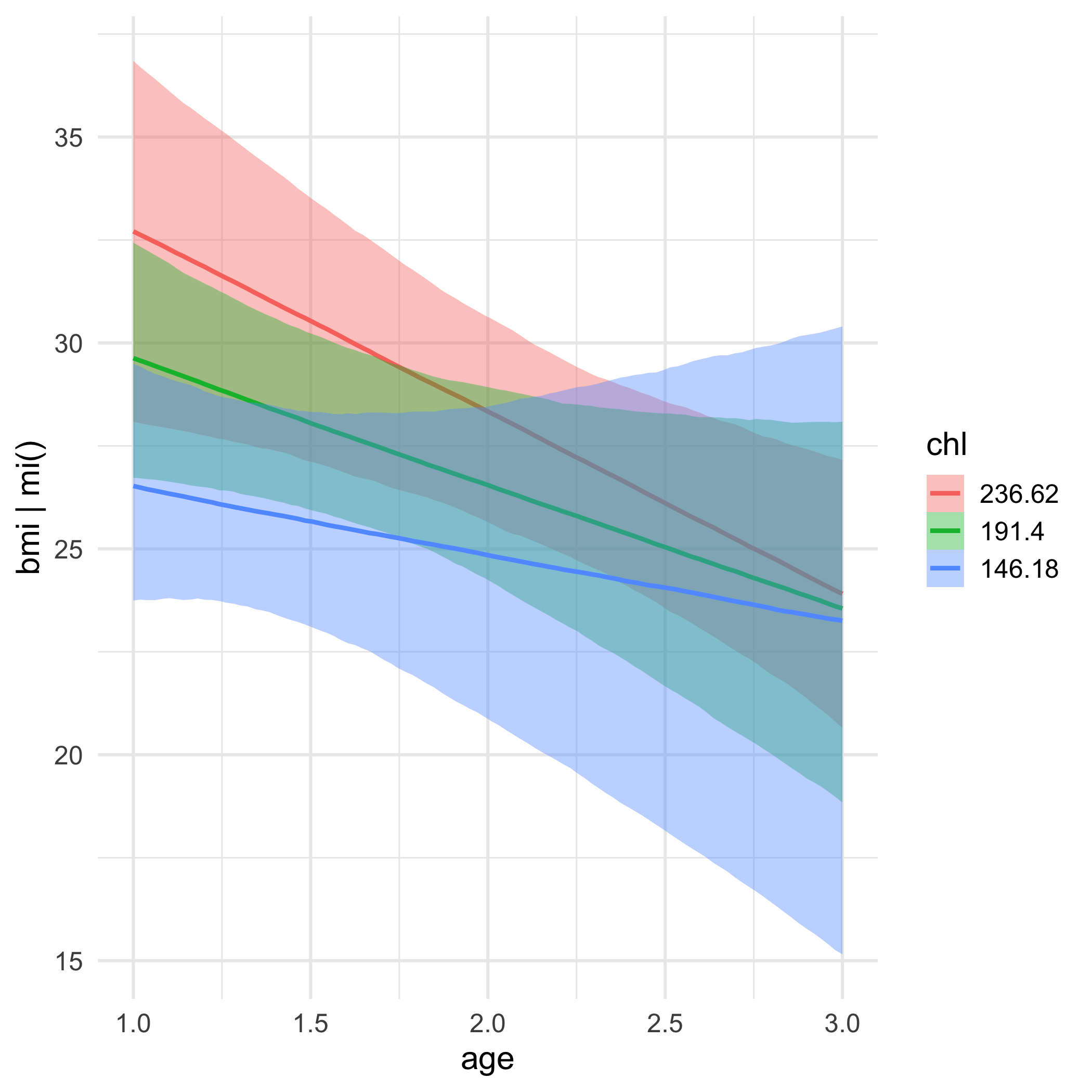

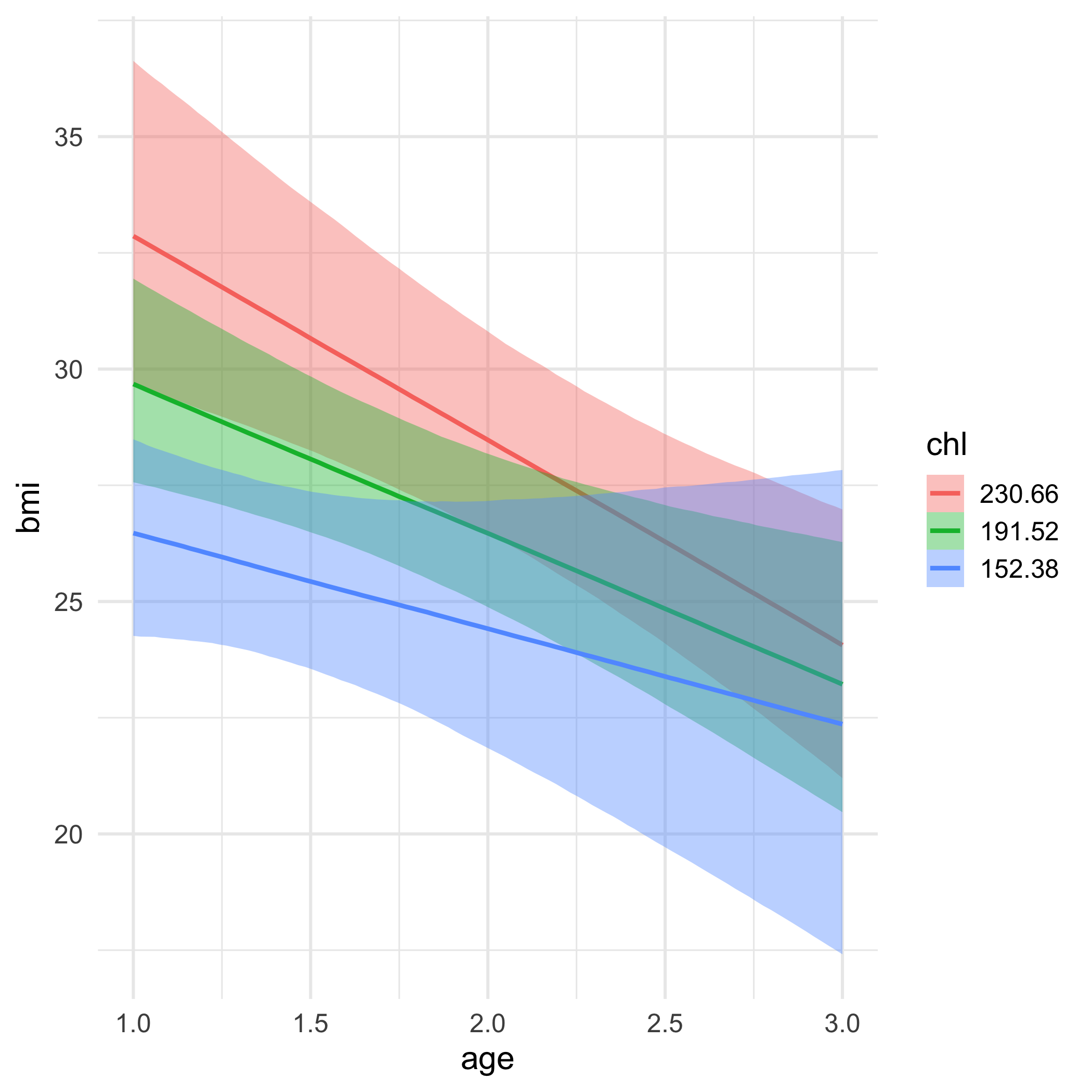

Build a model where age, lung capacity, and tumor size predict whether or not the patient was in remission.

Build the model so you can evaluate whether the relation between tumor size and the likelihood of remission depends on age.

Allow the intercept to vary by the doctor ID.

Fit the model using brms.
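A reminder of the formula syntax this needs: in R, age * tumorsize is shorthand for both main effects plus their interaction. A base-R sketch on a tiny made-up data frame (toy is hypothetical; the (1 | did) part is brms/lme4 syntax and is left out here):

```r
# A tiny made-up data set with the same column names as hdp
toy <- data.frame(
  remission    = c(0, 1, 0, 1),
  age          = c(50, 60, 45, 70),
  tumorsize    = c(60, 70, 55, 80),
  lungcapacity = c(0.8, 0.6, 0.9, 0.7)
)

# Fixed-effects part only
f <- remission ~ age * tumorsize + lungcapacity

# age * tumorsize expands to age + tumorsize + age:tumorsize
attr(terms(f), "term.labels")

# In the design matrix, the interaction column is the product age * tumorsize
X <- model.matrix(f, data = toy)
X
```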


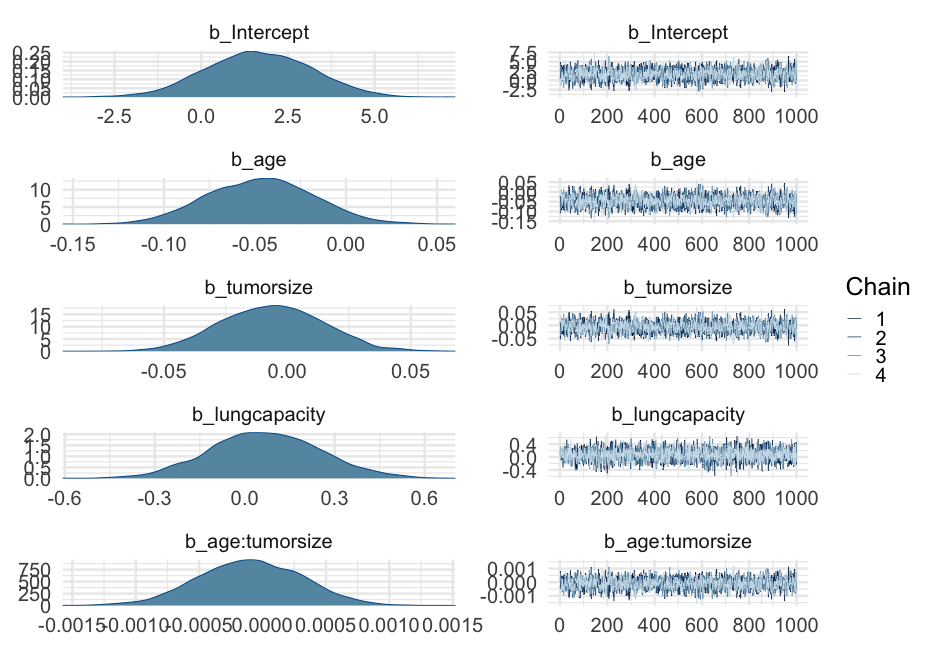

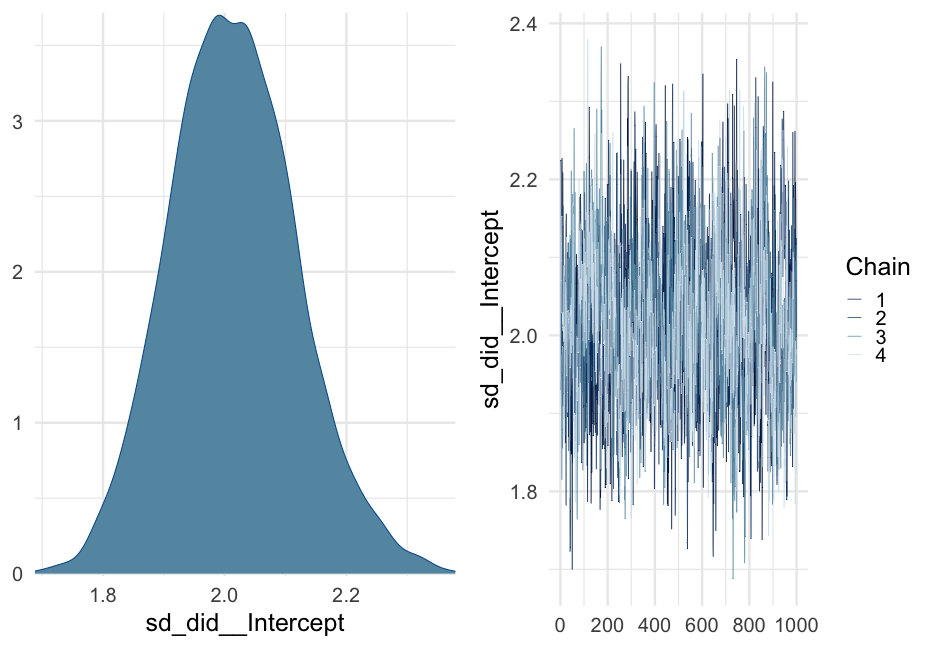

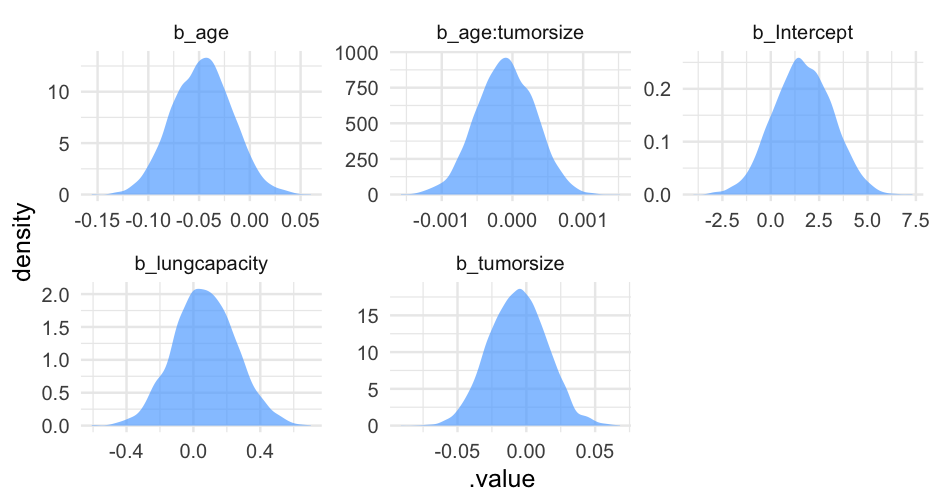

Lung cancer remission model
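Written out in the same notation as the nurses model (a sketch; the symbols are ours, not brms output), the model fit below is:

$$
\begin{aligned}
\operatorname{remission}_{i} &\sim \operatorname{Bernoulli}\left(p_{i}\right) \\
\log\left(\frac{p_{i}}{1 - p_{i}}\right) &= \alpha_{j[i]} + \beta_{1}(\operatorname{age}_{i}) + \beta_{2}(\operatorname{tumorsize}_{i}) + \beta_{3}(\operatorname{age}_{i} \times \operatorname{tumorsize}_{i}) + \beta_{4}(\operatorname{lungcapacity}_{i}) \\
\alpha_{j} &\sim N\left(\mu_{\alpha_{j}}, \sigma^2_{\alpha_{j}}\right) \text{, for did j = 1,} \dots \text{,J}
\end{aligned}
$$

Because of the interaction, the tumor size slope on the log-odds scale is $\beta_{2} + \beta_{3}(\operatorname{age}_{i})$, so a nonzero $\beta_{3}$ means the tumor size relation depends on age.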

lc <- brm(
  remission ~ age * tumorsize + lungcapacity + (1|did),
  data = hdp,
  family = bernoulli(link = "logit"),
  cores = 4,
  backend = "cmdstanr"
)
## Running MCMC with 4 parallel chains...
##
## Chain 1 finished in 302.2 seconds.
## Chain 3 finished in 304.3 seconds.
## Chain 4 finished in 305.6 seconds.
## Chain 2 finished in 321.1 seconds.
##
## All 4 chains finished successfully.
## Mean chain execution time: 308.3 seconds.
## Total execution time: 321.6 seconds.

Model summary

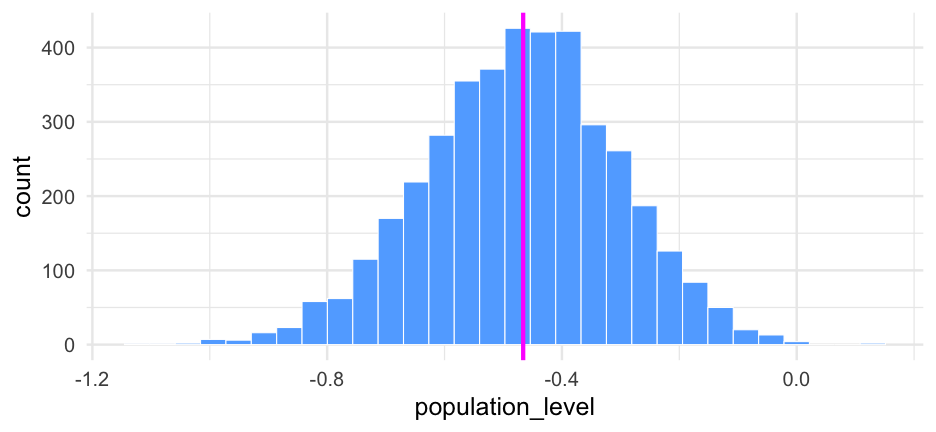

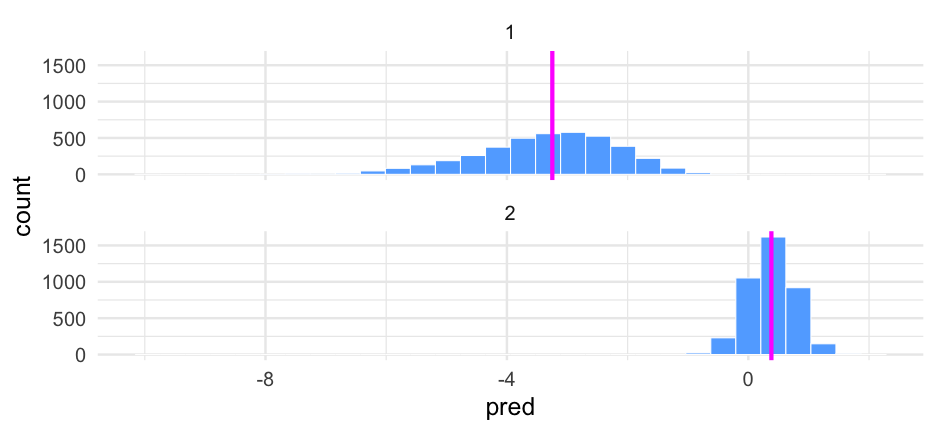

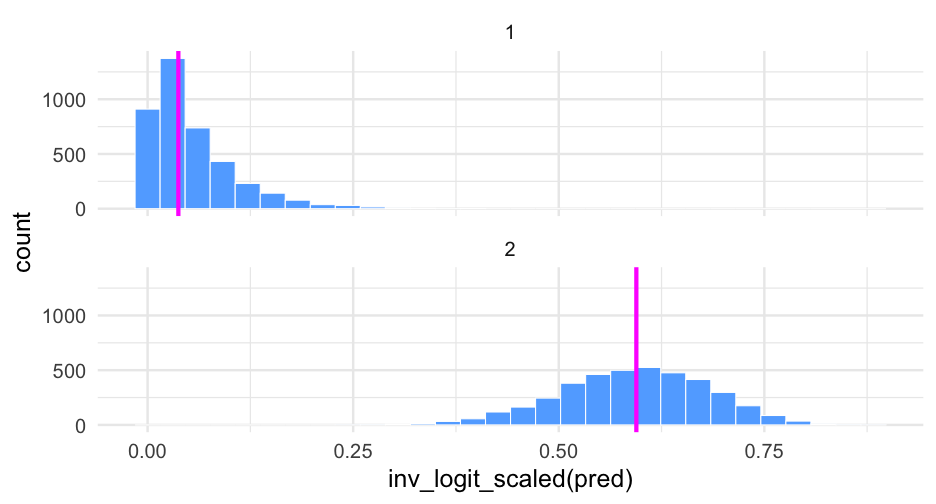

summary(lc)
##  Family: bernoulli
##   Links: mu = logit
## Formula: remission ~ age * tumorsize + lungcapacity + (1 | did)
##    Data: hdp (Number of observations: 8525)
## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;
##          total post-warmup samples = 4000
##
## Group-Level Effects:
## ~did (Number of levels: 407)
##               Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## sd(Intercept)     2.01      0.10     1.82     2.23 1.00      599     1603
##
## Population-Level Effects:
##               Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS
## Intercept         1.66      1.51    -1.31     4.57 1.00     1925     2782
## age              -0.05      0.03    -0.10     0.01 1.00     1919     2538
## tumorsize        -0.01      0.02    -0.05     0.03 1.00     1931     2833
## lungcapacity      0.07      0.19    -0.29     0.44 1.00     4872     3061
## age:tumorsize    -0.00      0.00    -0.00     0.00 1.00     1905     2810
##
## Samples were drawn using sample(hmc). For each parameter, Bulk_ESS
## and Tail_ESS are effective sample size measures, and Rhat is the potential
## scale reduction factor on split chains (at convergence, Rhat = 1).

pred_tumor
## # A tibble: 555,100 x 9
## # Groups: age, lungcapacity, tumorsize, did, .row [5,551]
## age lungcapacity tumorsize did .row .chain .iteration .draw .value
## <int> <dbl> <int> <dbl> <int> <int> <int> <int> <dbl>
## 1 20 0.7740865 30 -999 1 NA NA 22 0.8898250
## 2 20 0.7740865 30 -999 1 NA NA 167 0.1359217
## 3 20 0.7740865 30 -999 1 NA NA 296 0.4548241
## 4 20 0.7740865 30 -999 1 NA NA 327 0.9331879
## 5 20 0.7740865 30 -999 1 NA NA 371 0.3171560
## 6 20 0.7740865 30 -999 1 NA NA 392 0.9858511
## 7 20 0.7740865 30 -999 1 NA NA 446 0.9968882
## 8 20 0.7740865 30 -999 1 NA NA 461 0.3545641
## 9 20 0.7740865 30 -999 1 NA NA 555 0.8710298
## 10 20 0.7740865 30 -999 1 NA NA 559 0.7410803
## # … with 555,090 more rows

Variance by Doctor

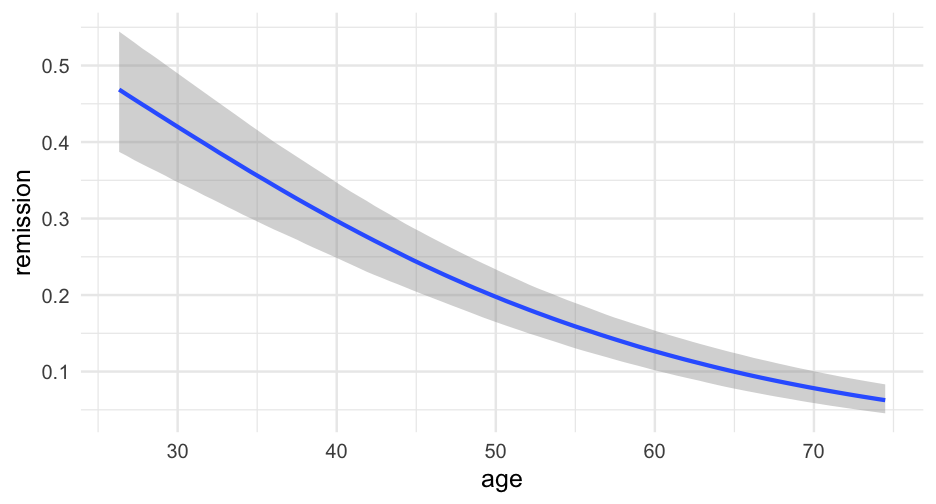

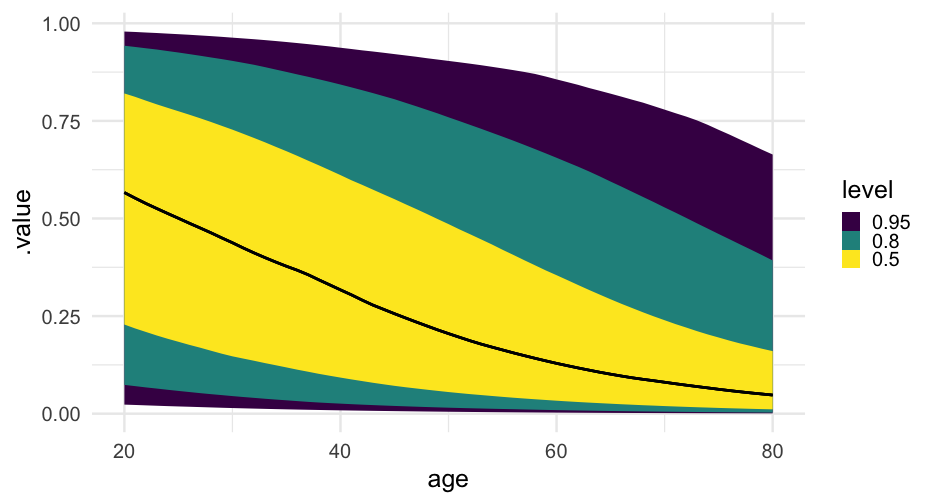

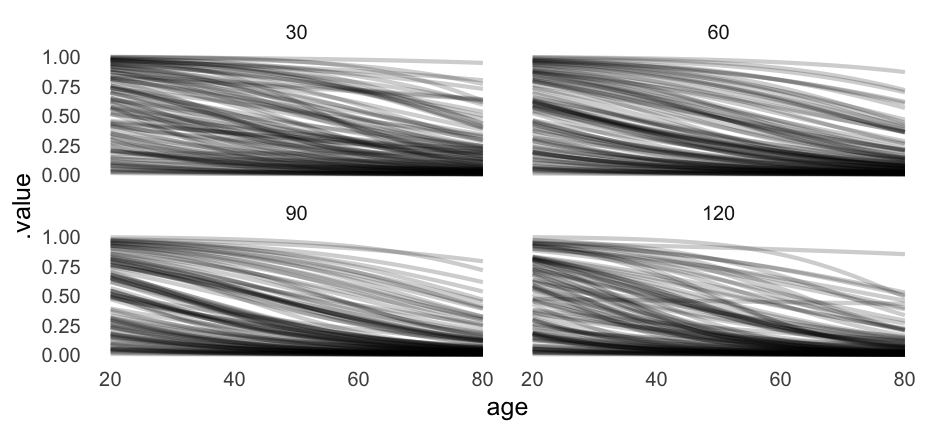

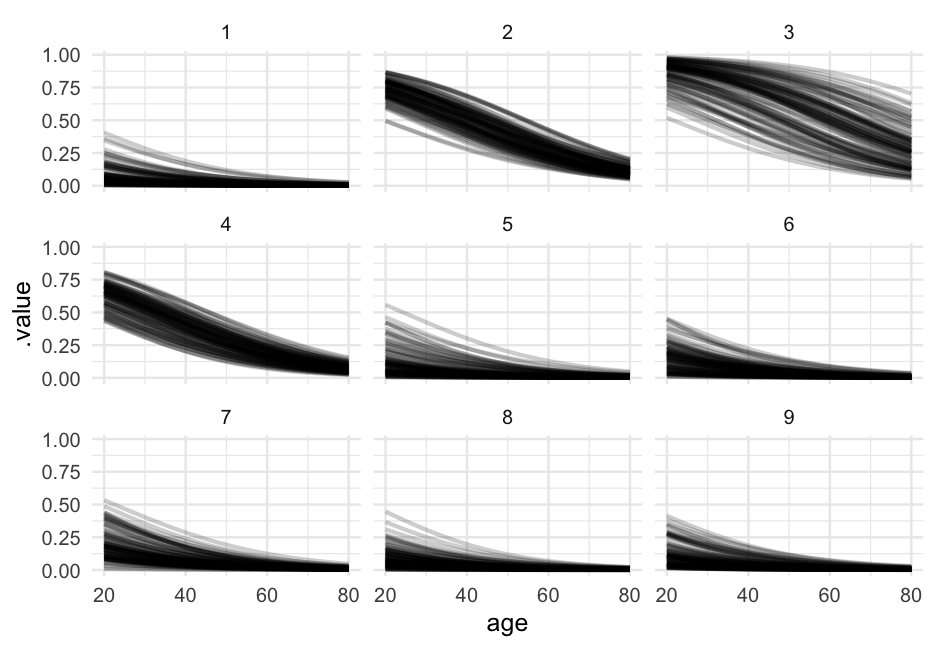

Let's look at the relation between age and the probability of remission for each of the first nine doctors.
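Why should the doctor curves differ? In this model each doctor shifts the intercept on the log-odds scale, so the curves are parallel on the logit scale but sit at different heights (and bend differently) once converted to probabilities. A minimal sketch, where a and b_age are made-up values for a fixed tumor size and lung capacity (the interaction is absorbed into them) and only the sd(Intercept) of about 2.01 comes from summary(lc):

```r
# Hypothetical fixed part on the log-odds scale; made up for illustration
a     <- 1
b_age <- -0.05
age   <- c(40, 50, 60, 70)

# Doctor intercept deviations at -1 SD, 0, and +1 SD,
# using the sd(Intercept) of about 2.01 reported in summary(lc)
sd_doctor <- 2.01
u <- c(low = -sd_doctor, average = 0, high = sd_doctor)

# Rows are ages, columns are doctors; plogis() is the inverse logit
curves <- outer(a + b_age * age, u, function(x, y) plogis(x + y))
rownames(curves) <- paste0("age_", age)
round(curves, 2)
```

With a doctor-level standard deviation near 2 on the logit scale, the same patient profile can map to very different remission probabilities depending on the doctor.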